The 10 Tenets of Safe AI: A Cheat Sheet for Executives

AI has the potential to bring exciting new opportunities to just about every part of our lives, but it's important to balance that excitement with a commitment to minimizing risk. As with any powerful technology, organizations must prioritize the safe development, deployment, and (if necessary) deprecation of their AI tools.

What Is Safe AI?

Safe AI refers to the design, development, and deployment of artificial intelligence technologies in a manner that minimizes harm and maximizes benefits to humanity. It incorporates practices that empower leaders to:

- Foster transparency in AI algorithms

- Respect privacy and data rights

- Help ensure accountability in AI operations

- Prevent discriminatory or biased outcomes

Safe AI requires an ongoing commitment to reviewing and refining AI systems. Its primary focus is the wellbeing of individuals and society at large, underpinned by a strong ethical framework that guides enterprise decision-makers throughout the AI pipeline.

Why Is Safe AI Important?

The rapid evolution and scaling of AI across today's common use cases bring both immense possibilities and significant risks. Safe AI is not just a technical requirement but a moral imperative, ensuring that the technology we create and use adheres to ethical standards and contributes positively to society.

Ensuring safety, ethics, and responsibility in AI fosters trust among users and helps ensure compliance with rapidly evolving regulatory landscapes. Without an agile approach to Safe AI, organizations can quickly find themselves on the wrong end of a lawsuit or major public backlash.

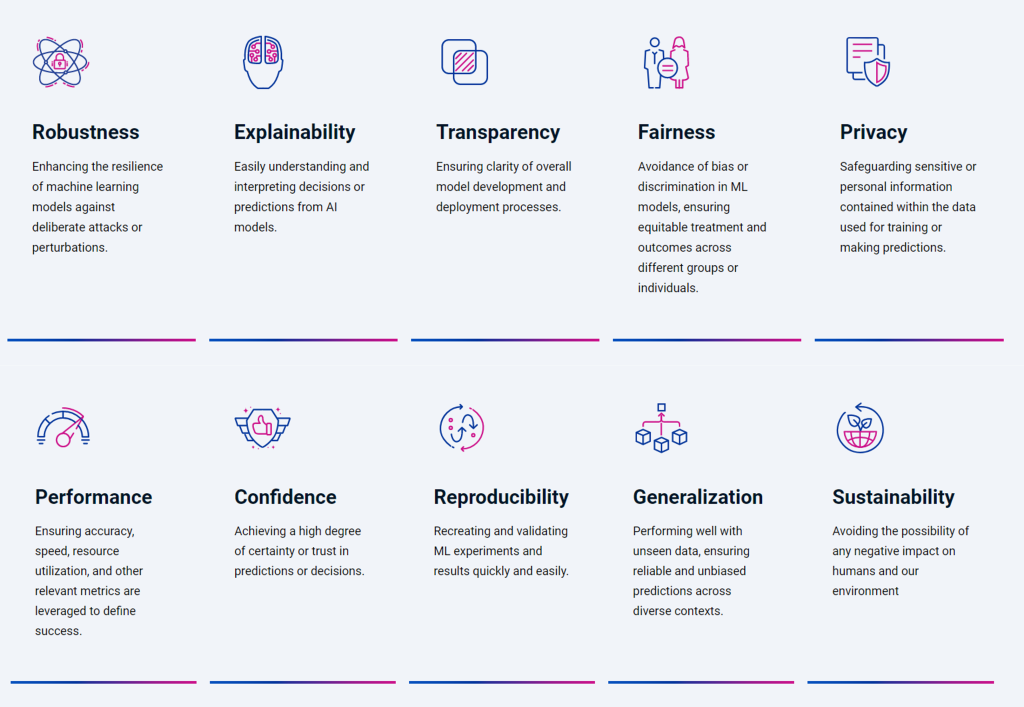

The 10 Tenets of Safe AI

With that in mind, we present the 10 Tenets of Safe AI. These tenets, informed by the Institute of Electrical and Electronics Engineers (IEEE) Code of Ethics and the Microsoft Responsible AI Standard, are intended to offer a comprehensive guide to navigating the complex landscape of AI safety.

Because these principles share a few key commonalities, it can be helpful to group them into three categories: ethical integrity, system robustness, and user trust and safety.

1) Ethical Integrity

Ethical integrity is of paramount importance in AI and includes the principles of fairness, transparency, and explainability.

- Fairness helps ensure that AI models provide impartial, balanced outcomes across diverse user groups, fostering a sense of justice and equality.

- Transparency offers clear insights into every stage of AI development and deployment. This encourages accountability, ethical compliance, and responsibility, and it establishes trust among users and stakeholders alike.

- Explainability is all about making AI understandable to users. It demystifies AI decisions, empowering users to comprehend and interpret the models' outputs more effectively.
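For teams putting the fairness tenet into practice, one common way to check for "balanced outcomes across diverse user groups" is to compare how often a model produces a positive outcome for each group. The Python sketch below illustrates this with demographic parity difference, which is only one of many fairness metrics and is not prescribed by this cheat sheet or the standards it cites. The function names and sample data are hypothetical, chosen purely for illustration.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Return the share of positive (1) predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(predictions, groups):
    """Largest difference in selection rate between any two groups (0 means parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical loan-approval decisions (1 = approved) for two applicant groups
preds = [1, 0, 1, 1, 0, 1, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

print(selection_rates(preds, groups))         # {'A': 0.75, 'B': 0.25}
print(demographic_parity_gap(preds, groups))  # 0.5
```

A gap of 0.5 means group A is approved three times as often as group B in this toy data, the kind of signal that would prompt a closer review. Which metric and threshold are appropriate depends on the use case and applicable regulations.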

2) System Robustness

System robustness is another pivotal part of Safe AI, this time focusing on the resilience and performance of AI systems.

- Robustness means models remain stable and reliable under a wide variety of conditions. A system is robust if it is capable of withstanding both intentional disruptions (such as adversarial attacks) and unintentional ones (such as noisy or unexpected input data).

- Maintaining an AI system's performance is critical for organizations. If performance degrades, operational speed, accuracy, and resource utilization can suffer as a result.

- Reproducibility makes it possible to recreate and validate machine learning (ML) experiments and their results easily and accurately. This helps ensure scientific integrity and reliability in AI research and development.
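At a technical level, reproducibility often starts with controlling randomness and recording exactly what was run. The minimal Python sketch below assumes a hypothetical toy "experiment" that shuffles and samples data using a seeded random number generator, then hashes the configuration together with the result so a team can confirm that a rerun matched. Real ML pipelines typically also need to pin library versions, data snapshots, and hardware-specific settings, which this sketch does not cover.

```python
import hashlib
import json
import random


def run_experiment(config):
    """Hypothetical toy experiment: shuffle data and take a sample using a fixed seed."""
    # A local RNG keeps results independent of any global random state
    rng = random.Random(config["seed"])
    data = list(range(config["n_items"]))
    rng.shuffle(data)
    return data[: config["sample_size"]]


def fingerprint(config, result):
    """Hash the config and result together so a rerun can be checked for an exact match."""
    payload = json.dumps({"config": config, "result": result}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


config = {"seed": 42, "n_items": 100, "sample_size": 5}
first = run_experiment(config)
second = run_experiment(config)

# Same config and seed produce the same result and the same fingerprint
print(fingerprint(config, first) == fingerprint(config, second))  # True
```

Storing the fingerprint alongside the experiment record gives reviewers a quick, objective way to validate that a reported result can actually be recreated.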

3) User Trust and Safety

User trust and safety centers on four principles: privacy, confidence, generalization, and sustainability.

- Privacy regulations vary across regions, and violating them can carry substantial legal and reputational penalties. A commitment to privacy protection shields user information, supports the confidentiality and security of data, and helps prevent costly data breaches.

- Confidence is critical for building reliance on AI technologies and establishing a foundation of trust between users and AI systems. It is the pillar that supports user acceptance and usage of the AI models you deploy.

- Generalization is key to developing versatile, reliable AI applications. It involves ensuring that AI models adapt and perform well when presented with different use cases across diverse contexts.

- Sustainability promotes the development of environmentally friendly and socially responsible AI solutions by focusing on the long-term viability and environmental footprint of AI technologies.

Maximize Productivity, Minimize Risk

The purpose of these 10 tenets is to guide businesses toward creating robust, fair, and transparent AI for the benefit of all people. In a world where AI is inseparably intertwined with our daily lives, adhering to these principles is not merely advantageous; it is a business imperative.

Centific's Safe AI Consulting Services team believes in the transformative power of AI and is committed to ensuring its safe and beneficial deployment.

That’s why we’ve developed the Safe AI Maturity Model, which outlines the stages organizations go through when implementing Safe AI, from initial awareness to final transformation. This roadmap offers an invaluable reference point for organizations to assess their current standing and advance toward the comprehensive adoption of Safe AI principles.

Learn more about Safe AI principles and the Centific Safe AI Maturity Model.